How to implement successful A/B tests on your website and product

26 May 2022 • 8 min read

Done properly, A/B testing is a powerful tool that can help you optimise your website to get the best out of your current traffic and improve your conversion rate. So how do you do A/B testing properly? Karine Tardivel, UX & Design Principal at AND Digital, explains.

What is A/B testing?

A/B testing is the process of comparing two versions of a web page to determine which one better achieves a given conversion goal. Traffic is split randomly between the versions, and the results are compared to see which version of the page performs better.
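One common way to split traffic is to hash a stable user identifier together with an experiment name and use the result to pick a bucket. The Python below is a minimal sketch of that idea, not how any particular testing tool works: the function name `assign_variant`, the experiment label and the choice of SHA-256 are illustrative assumptions. The point it shows is that the same user always sees the same version, while traffic as a whole splits roughly evenly.

```python
import hashlib
from collections import Counter
from typing import Sequence


def assign_variant(user_id: str, experiment: str,
                   variants: Sequence[str] = ("A", "B")) -> str:
    """Hypothetical helper: deterministically map a user to one variant.

    Hashing the experiment name together with the user ID means a user keeps
    the same variant for the whole experiment, while different experiments
    split users independently of each other.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    # Interpret the SHA-256 digest as a large integer and reduce it to a bucket index.
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Simulate 10,000 visitors and show how evenly they were split.
    counts = Counter(assign_variant(f"user-{i}", "pdp-delivery-banner")
                     for i in range(10_000))
    print(counts)
```

Assigning a random version on every page view would also split traffic, but hashing a stable ID avoids showing the same visitor different versions on repeat visits, which would muddy the comparison.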

A/B testing helps you make data-driven decisions. It is a low-risk way of testing because it shows what works and what doesn't before a change is rolled out.

The benefits of A/B testing

There are many benefits of implementing A/B testing on your website or app:

1. Improve user experience

Every user who reaches your website has a goal in mind (e.g. buying a product, gaining information about a service, browsing etc). If users cannot achieve their goals due to poor user experience, they will leave your website. They may never return.

2. Get the most out of your existing traffic

Attracting new, quality users to your site costs time and money. A/B testing helps you make the most of your existing traffic, as even a small change can produce a significant increase in conversions.

3. Make low-risk changes

A/B testing lets you make incremental changes instead of redesigning an entire page, which alone can reduce the risk of harming your current conversion rate. For example, you may want to change the way you display your prices because you believe they are too small to read. A/B testing will show you the impact of that change before you deploy it across your site. Implementing changes without testing them first is like playing a game of poker: you never quite know how people will react to your move. Companies often spend time and money designing and developing changes, only to find later that they have negatively affected their conversion rate. A/B testing helps to remove this risk.

4. Make design changes

Design trends change and brands will often want to evolve their design language.

Yet whether it is a simple change to button text or a full page redesign, the decision to put new designs in place should always be data-driven, and A/B testing provides that data.

Common things you should A/B test:

Forms

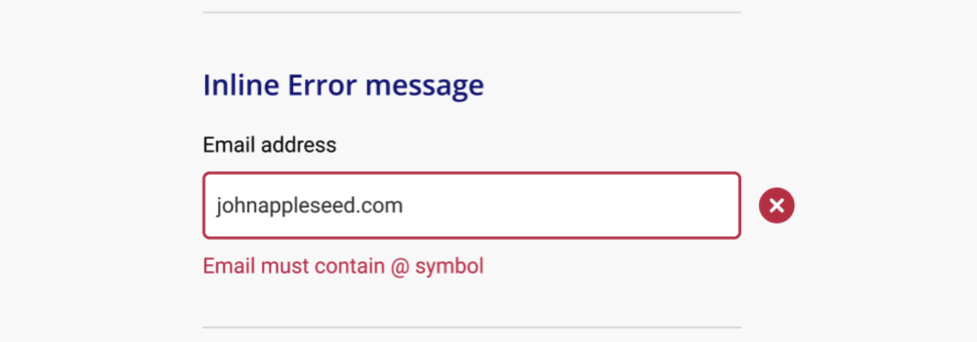

Forms are an important part of the customer experience. They usually sit within the conversion funnel and can therefore be a sticking point in the customer journey. For example, asking for personal information too early in the journey leads to drop-offs. A common example on an eCommerce website is requiring users to create an account before they can check out. In this case, offering a "checkout as guest" option would remove that barrier, and building an A/B test around it would be beneficial. Another common usability issue is forms with no inline validation, where users have to click "next" to find out whether they've made any mistakes or missed anything. Users want to progress through forms as fast as possible; the easier you make it, the better your conversion rate.

Make sure your forms are well optimised by conducting usability research using the methods described in the research step below.

Navigation

A well-structured navigation is crucial to a good user experience, and clear labelling and common patterns will help. Use techniques such as card sorting or tree testing to uncover your users’ pain points before implementing your A/B tests.

Source: Optimal Workshop

For example, while working on a car reseller website, we believed that their navigation language was confusing.

We tree tested the navigation and found that "my garage", the label used to log in or create an account, was where users expected to be able to book their MOT. We wouldn't have uncovered their users' mental models without running this prior testing.

After understanding your users’ pain point(s), you can put in place the changes as A/B tests to verify your assumptions.

CTA (call to action)

CTAs guide your users throughout their journey on your site and are key to conversion. A/B testing enables you to test their placement, copy, size, and colour and figure out which variation has the most impact.

Design & layout

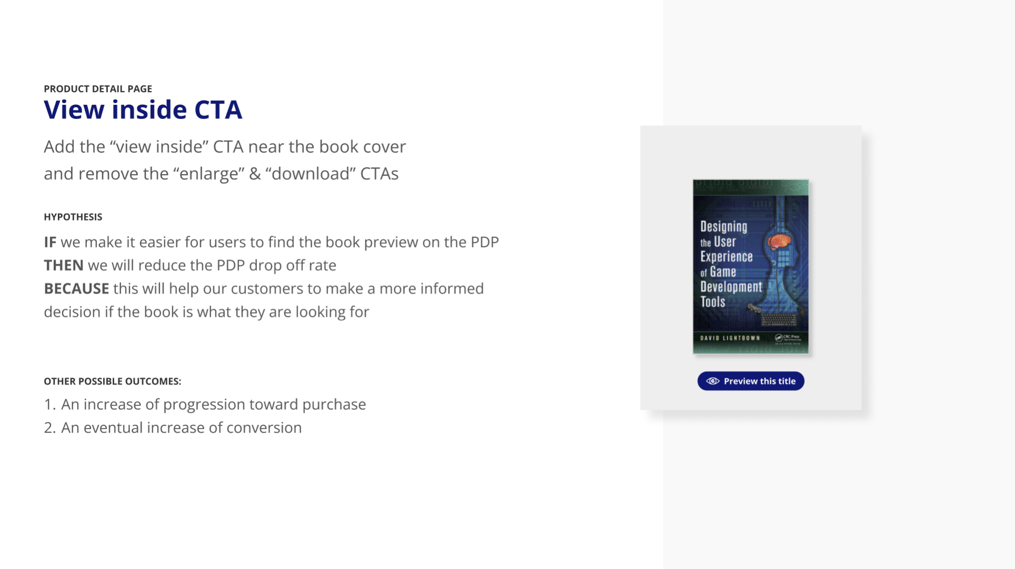

It can be tricky to know which design elements to prioritise on a page to help users complete their goals, and A/B testing can help. For example, the product description page on an eCommerce website is an important page because it contains all the information users need to make an informed decision. We recently helped a book retailer with A/B testing. When we carried out usability tests, we found that users on the product description page wanted to see a sample of the book. The page did have that feature, but most users were missing it because it wasn't positioned where they expected to find it. We added this variant to their A/B test (see image below) to verify that the changes would generate an increase in progression toward purchase. Other important pages that need attention are the home page and the landing pages that get the most traffic.

Social proof (trust)

Social proof, such as customer and expert reviews, celebrity endorsements and awards, helps your users trust your website and brand.

For example, on the book retailer website, we found that the majority of customers we interviewed didn't trust the site and wouldn't buy from it. The reasons were a lack of reviews, clunky functionality, poor image quality and outdated payment methods.

The product pages did have reviews, but they were at the very bottom of the page and were often missed. To address this, we created a variant that added review stars under the book titles (see below).

When integrating social proof, experiment with different types, placements and layouts to understand which combination has the most impact.

6 steps for building an A/B test

1. Research

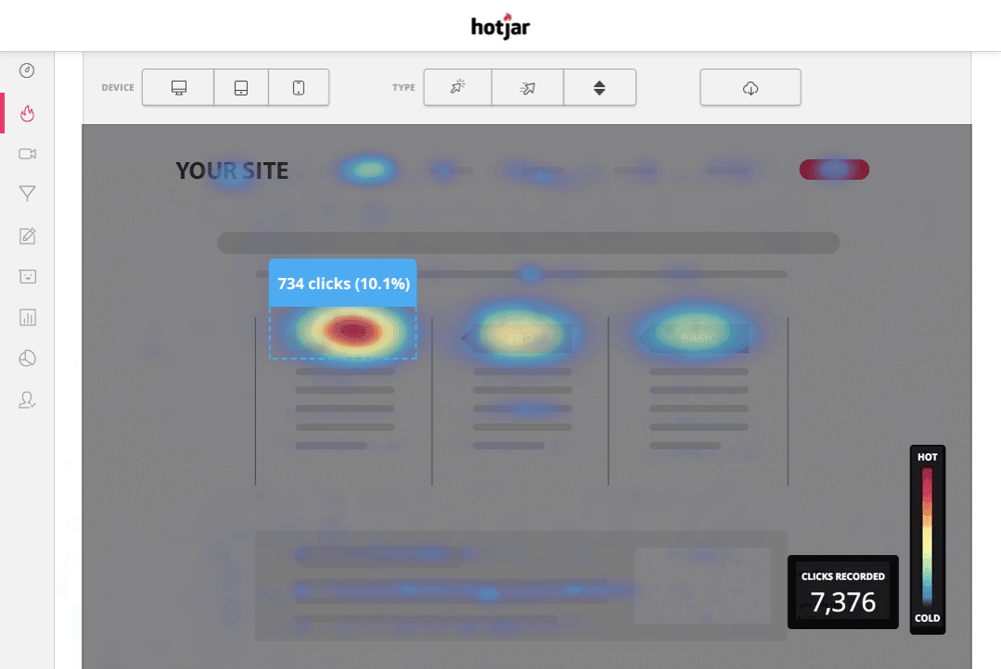

Before you build a test, you should understand your users’ needs, pain points and site performance. To get a good picture, combine quantitative and qualitative data. Quantitative data can come from your website analytics, which give you important metrics such as number of visitors, conversion rate, returning visitors, most visited pages and more. Use these metrics as benchmarks and keep them handy so you can compare results later. Then collect qualitative data using tools such as Hotjar and Contentsquare to create heatmaps and set up session recordings. Heatmaps reveal which areas of your website get the most or fewest interactions, which can help you prioritise content. Recordings are valuable for seeing how users navigate your site, and they help to identify usability issues. To go even further in your qualitative research, you could set up usability testing: recruit current and potential customers to understand the reasons behind your website metrics.

Source: Heatmaps from Hotjar

2. Create hypotheses

Once you’ve identified the main problems with your website, you can start generating ideas and hypotheses that help to explain and solve them. During this phase, you should also set a success metric for each hypothesis. For example, suppose you’ve identified that your current users aren’t aware of your free delivery offer, which meets an important user need. Your hypothesis for this scenario could look like this:

IF we clearly show the delivery offer on the product description pages

THEN we will see an increase in “add to basket” actions

BECAUSE this will build trust with customers and remove friction and questions around delivery

Don’t forget to list any other metrics that could change as a result. In this instance, the other possible outcomes could be:

1. An increase in progression toward purchase

2. A reduction in drop-offs on the product description page

3. An eventual increase in conversion rate

3. Design the experiments

The next step is to design variants based on each of your hypotheses. A variant is an alternative version of the current page (the control) that contains the changes. You can also test more than one variant against the control, for example adding a C variant alongside B; this is often called A/B/n testing.

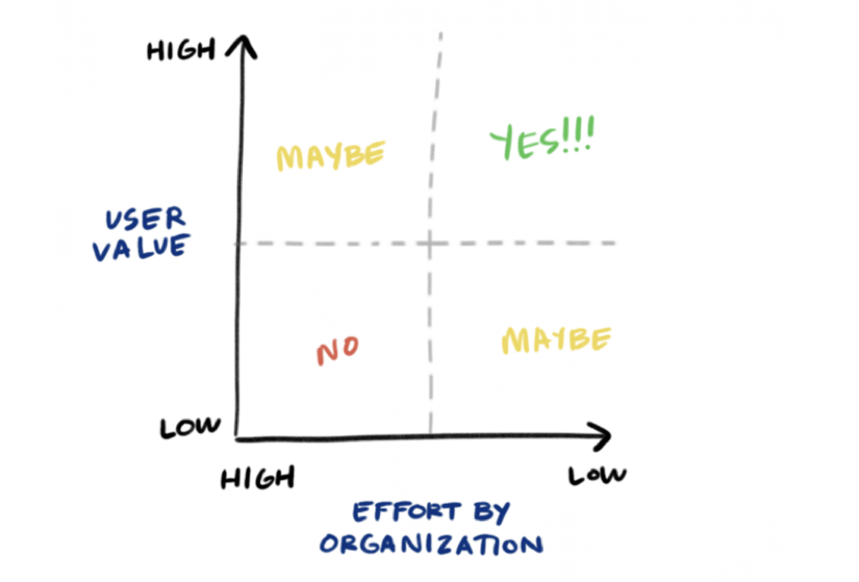

4. Prioritise the experiments

Now that you have your hypotheses and design variants, prioritise your tests using a value vs effort matrix. Do this in a workshop with your product team, which should ideally include a developer, a product owner, a data scientist and a user experience designer. Having these disciplines in the room lets you prioritise the tests realistically.

Source: Nielsen Norman Group

5. Run the experiment

At this stage, you need to make sure the test runs long enough to reach statistical significance. You can calculate the test duration from your average daily and monthly visitors, estimated conversion rate, the smallest improvement you want to be able to detect, number of variations (including the control), percentage of visitors included in the test, and so on.
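The duration calculation can be sketched in Python using the standard normal-approximation sample-size formula for comparing two conversion rates. This is a simplified illustration, not what any specific testing tool computes: the function names are hypothetical, the defaults assume a two-sided test at 95% confidence and 80% power, and it needs a minimum detectable lift (the smallest relative improvement you want to be able to detect) as an input.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, min_detectable_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH variation to detect a relative lift in conversion.

    Normal-approximation formula for a two-sided, two-proportion test.
    """
    if not 0 < baseline_rate < 1:
        raise ValueError("baseline_rate must be between 0 and 1")
    if min_detectable_lift <= 0:
        raise ValueError("min_detectable_lift must be positive")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)  # expected rate in the variant
    if p2 >= 1:
        raise ValueError("lifted conversion rate must stay below 1")
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def estimate_duration_days(daily_visitors: float, baseline_rate: float,
                           min_detectable_lift: float, num_variations: int = 2,
                           share_in_test: float = 1.0) -> int:
    """Days until every variation (control included) reaches its sample size."""
    n = sample_size_per_variant(baseline_rate, min_detectable_lift)
    visitors_in_test_per_day = daily_visitors * share_in_test
    return math.ceil(n * num_variations / visitors_in_test_per_day)


if __name__ == "__main__":
    # Hypothetical inputs: 3% baseline conversion, aiming to detect a 10% relative lift,
    # 5,000 daily visitors with half of them included in the test.
    print(sample_size_per_variant(0.03, 0.10))
    print(estimate_duration_days(5_000, 0.03, 0.10, num_variations=2, share_in_test=0.5))
```

Note how quickly the required sample grows as the lift you want to detect shrinks; many teams also round the result up to whole weeks so that weekday and weekend behaviour are both represented.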

6. Analyse results and implement changes

Analyse the results to determine the winning variant. This is a crucial step of the journey. Consider metrics such as percentage increase, confidence level, and direct or indirect impact on the conversion rate. If you have a clear winner, deploy the changes and keep measuring the impact after implementation. If the test fails, gather insights using tools such as Hotjar to record sessions while the test is running, then create another variant based on your conclusions and test it again.
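To check percentage increase and confidence level, one simple option is a two-proportion z-test on the conversion counts from each version. The sketch below is an illustrative Python version under that assumption; the function name and example numbers are hypothetical, and testing tools may use different methods, such as Bayesian or sequential statistics.

```python
import math
from statistics import NormalDist


def analyse_ab_test(conversions_a: int, visitors_a: int,
                    conversions_b: int, visitors_b: int) -> dict:
    """Compare control (A) with variant (B) using a two-sided two-proportion z-test."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        raise ValueError("cannot test: conversion counts show no variation")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        # The "percentage increase" of B over A, as a fraction.
        "relative_lift": math.inf if rate_a == 0 else (rate_b - rate_a) / rate_a,
        "z": z,
        "p_value": p_value,
        "significant_at_95": p_value < 0.05,
    }


if __name__ == "__main__":
    # Hypothetical results: 300/10,000 conversions for control, 360/10,000 for the variant.
    print(analyse_ab_test(300, 10_000, 360, 10_000))
```

A p-value below 0.05 corresponds to the conventional 95% confidence level. Checking repeatedly while the test runs and stopping as soon as the result crosses that threshold inflates false positives, which is another reason to fix the test duration in advance.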

Conclusion

A/B testing requires time and dedication, from conducting user research to building a backlog (i.e. a strategic list) of variants and prioritising them. Done properly, A/B testing is a powerful tool that will help you optimise your website to get the best out of your current traffic and improve your conversion rate.
